Naïve Information Aggregation In Human Social Learning

Cognition, 2023

- Jan-Philipp Fränken, Stanford University

- Simon Valentin, The University of Edinburgh

- Christopher G. Lucas, The University of Edinburgh

- Neil R. Bramley, The University of Edinburgh

Abstract

To glean accurate information from social networks, people should distinguish evidence from hearsay. For example, when testimony depends on others' beliefs as much as on first-hand information, there is a danger of evidence becoming inflated or ignored as it passes from person to person. We compare human inferences with an idealized rational account that anticipates and adjusts for these dependencies by evaluating peers' communications with respect to the underlying communication pathways. We report on three multi-player experiments examining the dynamics of both mixed human–artificial and all-human social networks. Our analyses suggest that most human inferences are best described by a naïve learning account that is insensitive to known or inferred dependencies between network peers. Consequently, we find that simulated social learners that assume their peers behave rationally make systematic judgement errors when reasoning on the basis of actual human communications. We suggest human groups learn collectively through naïve signalling and aggregation that is computationally efficient and surprisingly robust. Overall, our results challenge the idea that everyday social inference is well captured by idealized rational accounts and provide insight into the conditions under which collective wisdom can emerge from social interactions.

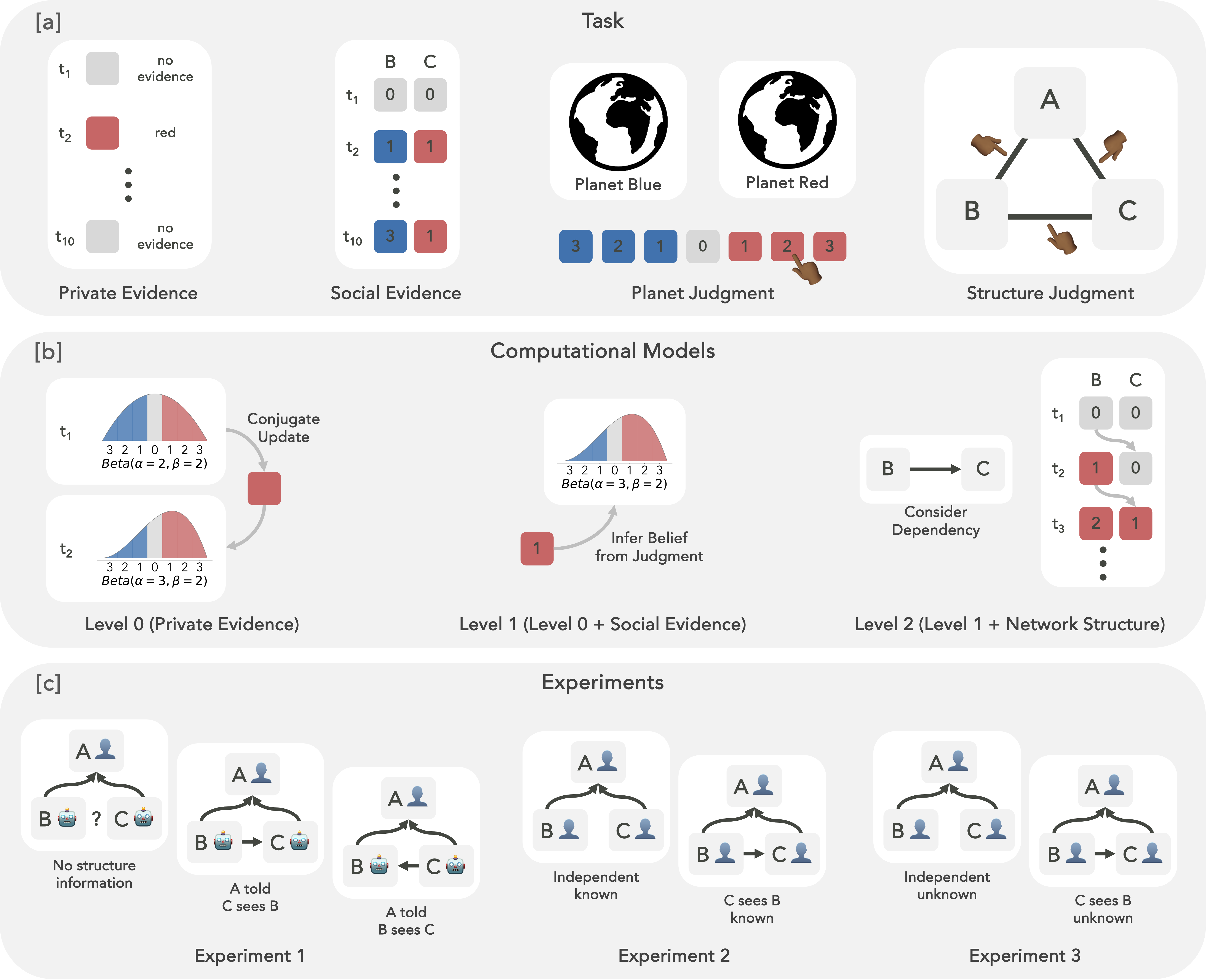

Figure 1. High-level overview of the paper. [a] Task illustration from the perspective of agent A (the focal participant across experiments). Over the course of ten trials, participants reason about their unknown location in space, i.e., whether they are on Planet Blue or Planet Red. Each planet has a different proportion of red and blue fish. On Planet Blue, 32 of the fish are blue and 13 are red. On Planet Red, the proportions are reversed. These proportions are known to participants. At each trial t, participants sample private evidence from the unknown planet ∈ {blue fish, red fish, no evidence} and observe social evidence corresponding to the previous judgments provided by two other agents (agents B and C). Participants then provide judgments about the planet they are on using a seven-point scale, ranging from 3–blue (highly confident planet blue) to 3–red (highly confident planet red), as well as a structure judgment about the communication structure between all three agents. Participants know that other agents’ judgments are elicited using the same seven-point scale and that all are incentivised to be correct. [b] Illustration of computational models. The baseline model (Level-0) uses a beta-binomial model to update its beliefs strictly from private evidence. The naïve social learning model (Level-1) aggregates other agents’ judgments and inverts a generative model of the observed judgments to infer the underlying belief (and thereby the evidence), followed by beta-binomial updates combining both observed private evidence and other agents’ inferred private evidence. The idealized rational model (Level-2) further conditions its inferences about the unknown private evidence on the communication structure (i.e., it corrects for dependencies in evidence), followed by the same beta-binomial updates as the Level-1 model. [c] Experimental setup. In Experiment 1, participants (agent A) interact with two idealized (artificial) agents whose judgments were fixed across conditions. Between conditions, we manipulate whether participants (agent A) receive no structure information, are told that C could see B’s judgments, or are told that B could see C’s judgments. In Experiments 2–3, the focal participant (agent A) interacts with two other human participants (B & C) and the actual network structure is manipulated across two conditions. Participants are informed about the network structure in Experiment 2 but must infer it in Experiment 3.
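The beta-binomial updating described for the Level-0 and Level-1 models in panel [b] of Figure 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation. The uniform Beta(1, 1) prior, the reading of the belief as a distribution over the proportion of blue fish, and the names `BetaBelief`, `level0`, and `naive_pool` are all assumptions made for illustration. The step in which Level-1 inverts a generative model of peers' judgments is not reproduced here; the sketch simply takes the inferred peer evidence as given counts.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief over the proportion of blue fish.

    The uniform Beta(1, 1) prior is an assumption, not taken from the paper.
    """
    alpha: float = 1.0
    beta: float = 1.0

    def update(self, blue: int = 0, red: int = 0) -> "BetaBelief":
        # Conjugate update: observed counts are added to the pseudo-counts.
        return BetaBelief(self.alpha + blue, self.beta + red)

    def mean(self) -> float:
        # Posterior mean of the proportion of blue fish.
        return self.alpha / (self.alpha + self.beta)


def level0(private_evidence: Iterable[Optional[str]]) -> BetaBelief:
    """Level-0 style learner: updates from private evidence only."""
    belief = BetaBelief()
    for obs in private_evidence:
        if obs == "blue":
            belief = belief.update(blue=1)
        elif obs == "red":
            belief = belief.update(red=1)
        # Any other value (e.g. None) means no evidence on that trial.
    return belief


def naive_pool(belief: BetaBelief,
               inferred_peer_evidence: Iterable[Tuple[int, int]]) -> BetaBelief:
    """Level-1 style pooling: add each peer's inferred (blue, red) counts.

    The counts are hypothetical inputs. Pooling them as if independent
    is what makes this learner naive: evidence that reached one peer via
    another is counted twice.
    """
    for blue, red in inferred_peer_evidence:
        belief = belief.update(blue=blue, red=red)
    return belief


own = level0(["blue", "blue", None, "red"])
pooled = naive_pool(own, [(1, 0), (2, 1)])
print(own, round(own.mean(), 3))
print(pooled, round(pooled.mean(), 3))
```

A Level-2 style learner would differ only in which peer counts it passes to the update: before pooling, it would discount any evidence that the communication structure implies a peer merely relayed.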